A/B testing transforms guesswork into data-driven decisions by comparing two versions to see which truly performs better. Understanding how to design, run, and analyse these tests empowers you to boost conversions effectively. With practical strategies and real examples, you can master A/B testing to optimise user experience and maximise results without unnecessary risks or wasted effort.

Essential guide to A/B testing: What it is, why it matters, and how to get started

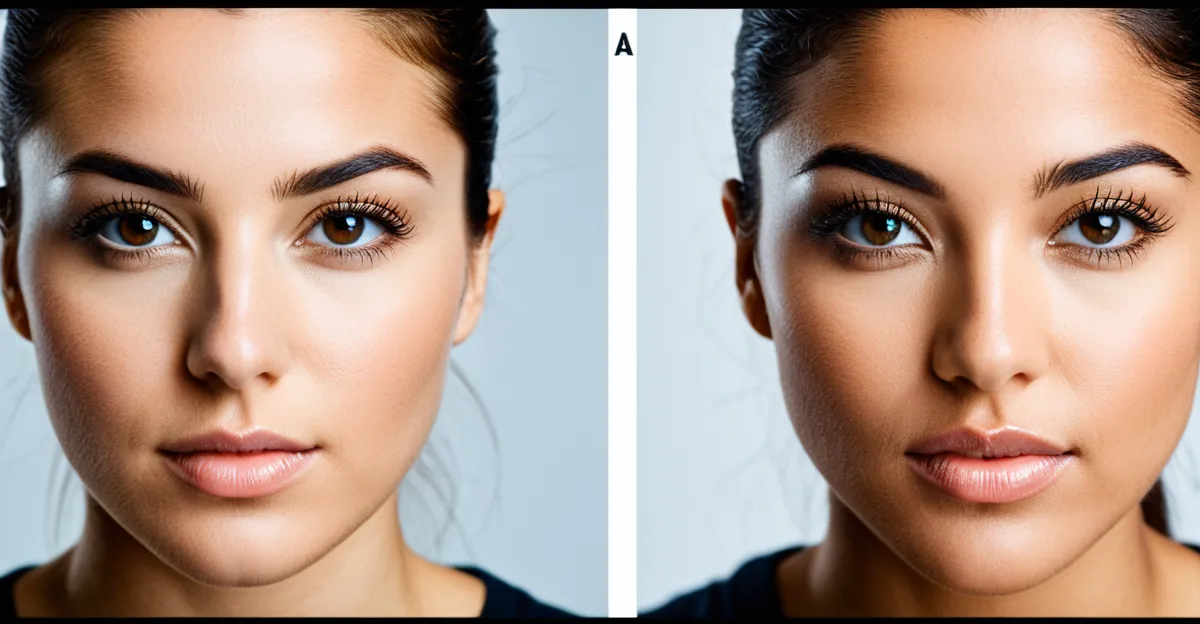

The foundation of effective digital optimization starts with understanding A/B testing, also called split or bucket testing. For practical advice on evolving a marketing or product strategy, you can learn a/b testing on kameleoon.com for a detailed view of this core methodology. A/B tests work by dividing users into randomized groups: one sees the original experience (the “control”), the other sees a variation. By comparing measured outcomes, such as click rates or conversions, between these groups, teams learn which version actually performs better. This approach replaces guesswork with evidence and supports data-driven decisions at scale.
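
The random split described above is often implemented as deterministic hashing, so that a returning user always sees the same version. The following is a minimal sketch under that assumption, not a description of how any particular platform does it; the function name `assign_variant`, the experiment key `homepage-cta`, and the user id format are hypothetical.

```python
import hashlib

def assign_variant(user_id: str, experiment: str,
                   variants=("control", "variation")) -> str:
    """Map a user to a variant by hashing the experiment and user ids.

    The same (experiment, user_id) pair always yields the same variant,
    and including the experiment name keeps assignments in different
    experiments independent of each other.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest[:8], 16) % len(variants)  # first 32 bits of the hash
    return variants[bucket]

# Hypothetical traffic: 10,000 synthetic user ids.
counts = {"control": 0, "variation": 0}
for i in range(10_000):
    counts[assign_variant(f"user-{i}", "homepage-cta")] += 1
print(counts)  # the two groups should be close to an even split
```

Hashing avoids storing an assignment table, at the cost of needing a stable user identifier.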


Split testing and multivariate experiments extend the process, allowing comparison of multiple versions or of changes to several elements at once. As more elements are tested together, complexity and traffic requirements rise, but so does the insight into how elements interact, which makes these methods useful for advanced campaigns and product development. Key to all methods is statistical significance: the observed difference must be large enough, given the sample size, that random chance is an unlikely explanation. Common statistical approaches (Z-tests, t-tests, and Bayesian inference) quantify confidence in a result and the estimated lift, guiding teams to valid conclusions and supporting incremental optimization.
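
As an illustration of the Z-test and lift calculation mentioned above, here is a minimal sketch of a two-sided, pooled two-proportion z-test using only the Python standard library. The conversion counts are made-up numbers, and the function name `two_proportion_z_test` is chosen for this example.

```python
from math import sqrt
from statistics import NormalDist

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value, relative lift of B over A)."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both versions convert equally.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    lift = (p_b - p_a) / p_a
    return z, p_value, lift

# Hypothetical results: control converts 200 of 5,000, variation 250 of 5,000.
z, p_value, lift = two_proportion_z_test(200, 5000, 250, 5000)
print(f"z = {z:.2f}, p = {p_value:.4f}, lift = {lift:.1%}")
```

A p-value below the chosen threshold (commonly 0.05) suggests the difference is unlikely to be due to chance alone; it does not by itself say the lift is large enough to matter.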

Formulating successful split tests begins with a well-structured hypothesis: an evidence-based prediction of what you expect to change and why. Examples include predicting a higher checkout completion rate from a shorter checkout form, or greater engagement from a higher-contrast call-to-action button color. After defining your hypothesis, segment audiences as needed (such as new vs. returning users) and set a single primary metric for success, like completed purchases or bounce rate.


A clear test design and planning phase sets the duration, the minimum sample size (crucial for validity), and the controls for bias. Sample size calculators and planning frameworks help ensure you gather enough data, run the test long enough, and interpret outcomes correctly. Data science practitioners also use techniques like CUPED (Controlled-experiment Using Pre-Experiment Data) for variance reduction, or machine learning for more nuanced audience targeting.
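
To make the sample size step concrete, the sketch below uses the standard normal-approximation formula for comparing two proportions. The baseline rate, the minimum detectable effect, and the daily traffic figure are hypothetical inputs, and dedicated calculators may use slightly different formulas.

```python
from math import ceil, sqrt
from statistics import NormalDist

def sample_size_per_group(baseline, mde_relative, alpha=0.05, power=0.8):
    """Users needed in EACH group to detect a relative lift of mde_relative."""
    p1 = baseline
    p2 = baseline * (1 + mde_relative)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return ceil(numerator / (p2 - p1) ** 2)

# Hypothetical plan: 4% baseline conversion, detect a 25% relative lift.
n = sample_size_per_group(baseline=0.04, mde_relative=0.25)
daily_visitors = 1_500  # assumed traffic entering the experiment per day
days = ceil(2 * n / daily_visitors)
print(f"{n} users per group, roughly {days} days at {daily_visitors} visitors/day")
```

Smaller expected effects need sharply more traffic, which is why the minimum detectable effect is worth agreeing on before the test starts.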

Efficient teams maintain a repeatable experimentation calendar, running tests sequentially, or in parallel on separate traffic segments so that experiments do not overlap. The end goal is a culture of continual improvement, where hypothesis-driven testing guides digital updates and decisions are supported by verifiable data.

Methods, frameworks, and real-world impact of A/B testing

Split tests form the backbone of user experience experiments and conversion rate optimization. Cleanly separating the control group from the test group creates the conditions for a valid hypothesis test and a meaningful sample size calculation. Precision in the statistical comparison of the two groups is mandatory, because errors at this stage distort the interpretation of results and undermine any optimization built on them.

Split testing, multivariate, and multipage methodologies: Their uses and limitations

Multivariate experiments go beyond split testing by assessing combinations of element changes. While a split test typically changes one variable at a time, multivariate methods can reveal the interplay between features, at the cost of more complex significance testing and larger sample size requirements. Multipage tests apply the same change consistently across several pages of a funnel. In all cases, careful test duration planning is needed, because every additional combination needs its own share of users to produce reliable outcomes.
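
The growth in traffic demand is easy to see by enumerating a full-factorial design. In this sketch the page elements, their options, and the per-combination user requirement are all hypothetical.

```python
from itertools import product

# Hypothetical page elements and the options tested for each.
elements = {
    "headline": ["original", "benefit-led"],
    "cta_color": ["blue", "green", "orange"],
    "hero_image": ["photo", "illustration"],
}

# Full-factorial design: every option of every element paired with every other.
combinations = [dict(zip(elements, values))
                for values in product(*elements.values())]

users_needed_per_cell = 6_000  # assumed requirement for each combination
total_users = len(combinations) * users_needed_per_cell

print(f"{len(combinations)} combinations, about {total_users:,} users in total")
```

Three elements with 2, 3, and 2 options already produce 2 × 3 × 2 = 12 cells, compared with 2 for a simple A/B test.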

Practical frameworks for executing A/B experiments

Two statistical frameworks dominate: Frequentist and Bayesian. Frequentist methods remain popular: they fix a null hypothesis and a significance threshold in advance, then evaluate the result once the planned sample has been collected. Bayesian methods instead update the probability that a variation is better as data arrives, which can support faster, more iterative decisions. The choice depends on available expertise, traffic, and the resources for analysis and reporting.
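
As a rough sketch of the Bayesian side, the example below estimates the probability that the variation beats the control using a Beta-Binomial model with uniform Beta(1, 1) priors and Monte Carlo sampling. The prior choice, the function name `prob_b_beats_a`, and the conversion counts are assumptions made for illustration.

```python
import random

def prob_b_beats_a(conv_a, n_a, conv_b, n_b, draws=20_000, seed=0):
    """Monte Carlo estimate of P(rate_B > rate_A) under Beta(1, 1) priors."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(draws):
        # Posterior for each arm: Beta(1 + conversions, 1 + non-conversions).
        rate_a = rng.betavariate(1 + conv_a, 1 + n_a - conv_a)
        rate_b = rng.betavariate(1 + conv_b, 1 + n_b - conv_b)
        wins += rate_b > rate_a
    return wins / draws

# Hypothetical results: control converts 200 of 5,000, variation 250 of 5,000.
probability = prob_b_beats_a(200, 5000, 250, 5000)
print(f"P(variation beats control) is about {probability:.3f}")
```

The output reads as a direct probability statement about the variation, which many teams find easier to act on than a p-value.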

Case studies: Social media, e-commerce, SaaS, and political campaigns

Real-world cases show split tests driving measurable change. Social media marketers test calls-to-action to increase engagement; SaaS firms use multivariate experiments to tune onboarding flows; e-commerce brands tweak product pages and measure the resulting lift; political campaigns run controlled tests to optimize donation forms. Each scenario highlights both the benefits of structured, hypothesis-driven testing and the care needed to interpret results correctly.

Tools, platforms, and implementation strategies for effective experiments

Overview and comparison of leading A/B testing tools and experimentation software

Precision in experimentation starts with robust split testing tools. Widely used platforms have included Google Optimize, Optimizely, and VWO, all designed to streamline the A/B testing process and support conversion rate optimization. Google Optimize offered a tightly integrated workflow with Google Analytics for test setup and reporting, but Google discontinued it in September 2023, so it is no longer an option for new experiments. Optimizely stands out for its flexibility in experiment targeting and its developer-friendly options. VWO includes advanced segment personalization and a visual editor suited to marketers exploring iterative changes without developer support. Comparing platforms involves evaluating test automation, ease of use, and how well each one supports multivariate experiments and user segmentation.

Integrating A/B testing with analytics stacks and optimization workflows

Connecting experimentation software to the analytics stack maximizes insight. Integration with a tool such as Google Analytics puts experiment exposure and outcome data in one place, which simplifies the comparison of test and control groups. Automation is now standard, letting teams run tests across the full funnel and keep experiments running continuously. Real-time reporting helps marketers validate hypotheses quickly, although stopping or adjusting a test early on the basis of interim results needs a method designed for it.

Tips for experiment planning, audience segmentation, and scaling tests across channels

Effective split tests begin with a sample size calculation, which free online calculators can provide. Dynamic content testing and robust user segmentation allow tests to scale across channels, including email campaigns, mobile apps, and e-commerce personalization. Planning with automation keeps experiment documentation consistent and makes it easier for teams to collaborate and to compare results across touchpoints.

Best practices, common mistakes, and optimization beyond the basics

Best-in-class testing protocols and data governance

Split testing best practices demand strict attention to randomization, especially when users are segmented, as is common in e-commerce tests. Keeping control and test group assignments unbiased and treating data consistently across groups keeps results trustworthy. Experimentation is only reliable when experiments are documented, metrics are defined before the test starts, and data privacy is protected within a robust data governance framework. Teams that record how each result was interpreted also gain transparent, audit-ready records.
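
One common way to check that randomization behaved as intended is a sample ratio mismatch (SRM) check, which compares observed group sizes with the planned split. The sketch below uses a chi-square goodness-of-fit test with one degree of freedom; the function name `srm_p_value`, the group counts, and the 0.001 alert threshold are assumptions for illustration.

```python
from math import erfc, sqrt

def srm_p_value(n_control, n_variation, expected_share_control=0.5):
    """P-value of a chi-square test (1 degree of freedom) on group sizes."""
    total = n_control + n_variation
    expected_control = total * expected_share_control
    expected_variation = total - expected_control
    chi2 = ((n_control - expected_control) ** 2 / expected_control
            + (n_variation - expected_variation) ** 2 / expected_variation)
    # For 1 degree of freedom, the chi-square survival function
    # equals erfc(sqrt(chi2 / 2)).
    return erfc(sqrt(chi2 / 2))

# Hypothetical group sizes for a planned 50/50 split.
healthy = srm_p_value(5_050, 4_950)
suspicious = srm_p_value(5_000, 5_400)
print(f"healthy split p = {healthy:.3f}, suspicious split p = {suspicious:.6f}")
if suspicious < 0.001:
    print("Possible sample ratio mismatch: investigate before trusting results.")
```

A very small p-value here says nothing about which variant wins; it signals that assignment or tracking may be broken.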

Frequent mistakes and pitfalls that undermine statistical validity

Common testing errors include running tests for too short a duration, testing with inadequate sample sizes, and failing to monitor for external factors such as seasonality or concurrent campaigns. These mistakes often cause statistical significance to be misjudged. Relying on unvalidated analytics, or skipping validation of the result itself, skews interpretation further. Always check segmentation too, because uneven segment allocation can inadvertently stack the test in favor of one variant.
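
A related pitfall is checking results repeatedly and stopping at the first significant reading. The simulation below is a minimal sketch of that effect on A/A tests, where both groups share the same true conversion rate, so every "significant" result is a false positive. All parameters (runs, users per arm, number of looks, the 10% rate) are arbitrary choices for illustration.

```python
import random
from math import sqrt
from statistics import NormalDist

def is_significant(conv_a, n_a, conv_b, n_b, alpha=0.05):
    """Two-sided pooled two-proportion z-test at level alpha."""
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    if p_pool in (0, 1):
        return False  # no variation in the data, nothing to test
    se = sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (conv_b / n_b - conv_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z))) < alpha

def simulate_aa(runs=300, users_per_arm=1000, looks=10, rate=0.10, seed=1):
    """Return (false positive rate with one final look, with repeated looks)."""
    rng = random.Random(seed)
    step = users_per_arm // looks
    fixed_hits = peeking_hits = 0
    for _ in range(runs):
        conv_a = conv_b = 0
        flagged_at_any_look = False
        for look in range(1, looks + 1):
            conv_a += sum(rng.random() < rate for _ in range(step))
            conv_b += sum(rng.random() < rate for _ in range(step))
            significant = is_significant(conv_a, look * step, conv_b, look * step)
            flagged_at_any_look = flagged_at_any_look or significant
        fixed_hits += significant            # result at the planned end only
        peeking_hits += flagged_at_any_look  # "stop at first significant look"
    return fixed_hits / runs, peeking_hits / runs

fixed_rate, peeking_rate = simulate_aa()
print(f"false positives: final look only {fixed_rate:.1%}, "
      f"any of 10 looks {peeking_rate:.1%}")
```

The repeated-look rate can never be lower than the single-look rate, and in practice it is usually well above the nominal 5%, which is the reason to fix the duration in advance or use a sequential method.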

Beyond basics: Iterative testing, continuous experimentation, and building a culture of evidence-based decisions

Optimization through experimentation means treating testing as an ongoing process rather than a one-off project. Teams should review the outcome of each test, record how the result was interpreted, and feed that learning into the next hypothesis. Over time, this continuous learning builds institutional knowledge. Combining split testing best practices with hypothesis-driven UX design keeps decisions grounded in data well beyond the first successful test.