Maximizing conversion rates hinges on mastering A/B testing beyond the basics. Clear goals, precise hypotheses, and strategic segmentation form the foundation of successful experiments. Incorporating personalization and advanced testing methods amplifies impact, while careful execution and data analysis ensure meaningful insights. Avoid common pitfalls by focusing on test design and statistical rigor. This approach transforms A/B testing from guesswork into a powerful tool for sustained conversion growth.

Essential Steps for Effective A/B Testing

Before running your first A/B test, set clear goals for your testing campaign. Defining what you want to achieve keeps the effort focused and makes the results meaningful. For example, you might aim to increase sign-up rates or reduce bounce rates. Without specific goals, it is difficult to judge whether the changes you make actually improve your website’s performance.


Next, determining key performance indicators (KPIs) is vital for tracking progress. In conversion optimization, KPIs might include metrics such as click-through rates, average session duration, or completed purchases. These KPIs should directly align with your goals to provide a precise measure of how well your test variations perform.
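
As a minimal sketch, assuming you already have raw counts per variant exported from your analytics tool (the class and field names below are hypothetical, and the numbers are made up), these KPIs reduce to simple ratios:

```python
from dataclasses import dataclass


@dataclass
class VariantStats:
    """Raw counts for one test variation (hypothetical field names)."""
    visitors: int
    clicks: int
    purchases: int

    def click_through_rate(self) -> float:
        # Share of visitors who clicked the tracked element
        return self.clicks / self.visitors if self.visitors else 0.0

    def conversion_rate(self) -> float:
        # Share of visitors who completed a purchase (the primary KPI here)
        return self.purchases / self.visitors if self.visitors else 0.0


# Made-up counts for illustration only
control = VariantStats(visitors=10_000, clicks=1_200, purchases=300)
variant = VariantStats(visitors=10_000, clicks=1_350, purchases=345)

for name, stats in (("control", control), ("variant", variant)):
    print(f"{name}: CTR={stats.click_through_rate():.2%}, "
          f"conversion={stats.conversion_rate():.2%}")
```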

Next, select the right elements to test. Focus on components that significantly influence user behavior: headlines, call-to-action buttons, images, and page layouts are common targets. Testing these elements shows which variations produce the greatest uplift in conversions.


Following these core steps creates a strong foundation for effective and insightful website testing. Clear goals, relevant KPIs, and a strategic choice of elements to test work together to optimize your conversion rates.

For those eager to deepen their understanding, you can learn a/b testing on kameleoon.com to enhance your strategic approach further.

Building and Prioritising Effective A/B Test Hypotheses

Crafting strong, data-driven hypotheses is critical for successful A/B testing aimed at conversion rate improvement. Begin by analyzing user behavior and previous test results to identify potential barriers or opportunities within your site or app. A well-constructed hypothesis clearly states what change is proposed, why it might work, and how success will be measured. For example, hypothesizing that simplifying a checkout form will reduce abandonment relies on data showing high drop-off rates at that step.

When it comes to hypothesis prioritization, focus on two main factors: the expected impact on conversion and the feasibility of implementing the test. Assign higher priority to hypotheses that address significant user pain points or that can be reliably tested within resource constraints. This ensures that your A/B testing efforts target changes likely to deliver noticeable gains rather than low-impact tweaks.
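
One simple way to apply these two factors, sketched here under the assumption that the team scores each hypothesis on a 1 to 10 scale, is to rank the backlog by impact multiplied by feasibility. The scale, the multiplicative score, and the example hypotheses are illustrative choices, not a standard framework:

```python
from dataclasses import dataclass


@dataclass
class Hypothesis:
    change: str        # what will be changed
    rationale: str     # the data that motivates it
    metric: str        # how success will be measured
    impact: int        # assumed scale: expected effect on conversion, 1 (low) to 10 (high)
    feasibility: int   # assumed scale: ease of building and testing, 1 (hard) to 10 (easy)

    @property
    def priority(self) -> int:
        # Illustrative score: a hypothesis needs both factors to be high to rank well
        return self.impact * self.feasibility


# Hypothetical backlog entries
backlog = [
    Hypothesis("Shorten checkout form", "High drop-off at form step",
               "Checkout completion rate", impact=8, feasibility=6),
    Hypothesis("Change footer link colour", "No supporting data",
               "Click-through rate", impact=2, feasibility=9),
    Hypothesis("Redesign pricing page", "Exit rate spikes on pricing",
               "Trial sign-up rate", impact=9, feasibility=3),
]

for h in sorted(backlog, key=lambda h: h.priority, reverse=True):
    print(f"{h.priority:>3}  {h.change}")
```

Each entry also records the proposed change, the data behind it, and the success metric, which keeps every hypothesis specific and measurable.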

Avoid common mistakes in hypothesis development such as vague goals, assumptions without data support, or testing multiple variables in one experiment, which can muddy results. Instead, keep hypotheses specific and measurable. Applying these principles increases the precision of your tests and facilitates actionable insights. For further mastery, you can learn a/b testing on kameleoon.com, an excellent resource for refining your approach.

Advanced Strategies to Elevate A/B Testing Results

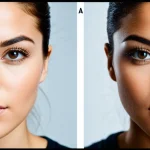

Elevating your A/B testing approach requires adopting advanced strategies that go beyond simple two-variant comparisons. One critical method is segmentation: breaking down your audience into specific groups based on behavior, demographics, or traffic source. This lets tests reveal how different segments respond, so your insights are not diluted by aggregated data. For example, separating mobile users from desktop users, or new visitors from returning customers, lets you tailor your marketing efforts more effectively.
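
To see how aggregated numbers can hide segment-level differences, here is a minimal sketch that breaks conversion rates down by device and variant. The event records are made-up tuples; in practice they would come from your analytics export:

```python
from collections import defaultdict

# Hypothetical per-visitor records: (segment, variant, converted)
events = [
    ("mobile", "A", False), ("mobile", "A", True), ("mobile", "B", True),
    ("mobile", "B", True), ("desktop", "A", True), ("desktop", "A", True),
    ("desktop", "B", False), ("desktop", "B", True),
]


def conversion_by_segment(records):
    """Return {(segment, variant): conversion_rate}."""
    totals = defaultdict(int)
    conversions = defaultdict(int)
    for segment, variant, converted in records:
        totals[(segment, variant)] += 1
        conversions[(segment, variant)] += int(converted)
    return {key: conversions[key] / totals[key] for key in totals}


rates = conversion_by_segment(events)
for (segment, variant), rate in sorted(rates.items()):
    print(f"{segment:<8} {variant}: {rate:.0%}")
```

In this toy data both variants convert 3 of 4 visitors overall, yet B wins on mobile and loses on desktop. With real data, each segment also needs enough traffic on its own before its result can be trusted.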

Another powerful tool is personalization, which refines audience targeting by delivering customized content or offers based on user attributes or previous interactions. Personalization tactics work hand-in-hand with segmentation, enabling you to test variations that resonate strongly with each subgroup, thereby maximizing conversion improvements.

For more complex scenarios, multivariate testing, which varies several page elements at once and compares their combinations, can be valuable. (Testing more than two versions of a single element is usually called A/B/n testing.) Because every combination needs its own share of traffic, multivariate testing demands a much larger sample size and careful experimental design to isolate which element drives performance. It is best employed after initial A/B tests have identified promising factors, so you can optimize their combinations efficiently.
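
The sample-size cost of testing several elements at once comes from the way combinations multiply. A short sketch, with hypothetical element options and an assumed daily traffic figure:

```python
from itertools import product

# Hypothetical options for three page elements
headlines = ["Save time", "Save money"]
buttons = ["Buy now", "Start free trial", "Get started"]
images = ["product", "lifestyle"]

# Every combination of options becomes its own test cell
combinations = list(product(headlines, buttons, images))
print(len(combinations))  # 12

daily_visitors = 6_000  # assumed traffic, split evenly across all cells
per_cell = daily_visitors // len(combinations)
print(f"{len(combinations)} cells, about {per_cell} visitors per cell per day")
```

Compared with a two-variant test on the same traffic, each cell here receives one sixth as many visitors, so reaching a given sample size per cell takes roughly six times as long.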

Combining segmentation, personalization, and multivariate testing empowers marketers to extract deeper insights and boost conversion rates. If you want to deepen your expertise in these methodologies, consider exploring how to learn a/b testing on kameleoon.com for a structured approach anchored in real-world applications.

Step-by-Step Guide to A/B Test Execution

To set up an A/B test correctly, begin by preparing your control and variant groups. Randomly assign visitors to equivalent groups so that any observed difference results from the change being tested rather than from external factors. Each group must also be large enough to yield meaningful data; too small a sample risks inconclusive results.
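
A common way to get random yet stable assignment is to hash a user identifier together with an experiment name, so the same user always lands in the same group. The sketch below assumes string user IDs and an equal split; the function name and the experiment name are hypothetical:

```python
import hashlib
from collections import Counter


def assign_variant(user_id: str, experiment: str, variants=("control", "variant")) -> str:
    """Deterministically map a user to one variant with (near-)equal probability."""
    # Salting with the experiment name keeps assignments independent across tests
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]


# The same user always receives the same variant for a given experiment
print(assign_variant("user-42", "checkout-form-test"))

# Over many users the split should be close to 50/50
split = Counter(assign_variant(f"user-{i}", "checkout-form-test") for i in range(10_000))
print(split)
```

Hashing rather than flipping a coin on every page view also prevents the same user from seeing both variants on repeat visits.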

When running the test, configure its parameters (duration, traffic allocation, and the metrics to track) to fit your specific goals. Run it over representative periods, ideally complete weeks, so that both weekday and weekend behavior are captured. Avoid rushing: stopping as soon as the results look good leads to premature conclusions that undermine the value of A/B testing.
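
Test duration follows from the sample size you need and the traffic you can send to the test. A minimal sketch, assuming the required sample per variant has already been calculated and that you round up to whole weeks so every day of the week is equally represented (the function name and figures are hypothetical):

```python
import math


def estimate_duration_days(sample_per_variant: int, n_variants: int,
                           daily_visitors: int, traffic_share: float = 1.0) -> int:
    """Days needed to reach the target sample, rounded up to full weeks."""
    # Visitors actually entering the experiment each day
    daily_in_test = daily_visitors * traffic_share
    raw_days = math.ceil(sample_per_variant * n_variants / daily_in_test)
    # Round up to a multiple of 7 so complete weekly cycles are covered
    return math.ceil(raw_days / 7) * 7


# Assumed: 25,000 visitors per variant, 2 variants, 4,000 daily visitors, half in the test
print(estimate_duration_days(sample_per_variant=25_000, n_variants=2,
                             daily_visitors=4_000, traffic_share=0.5))  # 28
```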

Achieving statistical significance is critical to validating your results. A significance test estimates how likely a difference at least as large as the one observed between control and variant would be if the change had no real effect (the p-value), and compares it with a significance level, commonly 5%, chosen before the test starts. Tools and calculators can run this check for you, but apply it at the planned end of the test rather than repeatedly while it runs, so the insights you act on remain reliable.
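
Calculators of this kind commonly run a two-proportion z-test. The sketch below implements the standard pooled version with Python's standard library; the visitor and conversion counts are invented for illustration, and a 5% significance level is assumed:

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Two-sided pooled z-test for a difference in conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    # Pooled rate under the null hypothesis that both variants convert equally
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Invented counts: 3.0% vs 3.6% conversion on 10,000 visitors each
z, p = two_proportion_z_test(conv_a=300, n_a=10_000, conv_b=360, n_b=10_000)
alpha = 0.05  # assumed significance level, fixed before the test starts
print(f"z = {z:.2f}, p = {p:.4f}, significant: {p < alpha}")
```

Run the test once, on the planned sample; checking after every batch of visitors and stopping at the first p below 0.05 inflates the false-positive rate.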

By following these steps carefully, you make your A/B testing process robust, reducing uncertainty and supporting confident, data-driven decisions. If you want to deepen your understanding or troubleshoot challenges, study practical methodologies and real-world applications; the resources to learn a/b testing on kameleoon.com cover both comprehensively.

Analysis and Interpretation of A/B Test Results

Understanding A/B test results analysis is crucial for extracting insights that drive conversion optimization. The first step is recognizing the difference between statistical significance and practical impact. Statistical significance indicates that the observed difference is unlikely to be explained by chance alone, but it does not guarantee that the measured conversion uplift is large enough to matter.

To gather actionable insights, it is essential to use precise tools and methods for collecting data. This includes tracking key metrics such as click-through rates, bounce rates, and revenue per visitor. Leveraging robust analytics platforms allows marketers to monitor the performance of each test variation accurately. These tools also assist in identifying which changes genuinely resonate with users, providing a solid foundation for data interpretation.

When interpreting the data, focus on both confidence intervals and effect sizes to better understand the real-world value of your test outcomes. For example, a small percentage increase in conversions may be statistically significant but might not justify implementation costs. Hence, interpreting test results balances statistical rigour with practical business considerations.
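
To put effect size and confidence interval side by side, here is a minimal sketch using the normal-approximation (Wald) interval for the difference between two conversion rates. The counts are hypothetical and the 95% level is an assumption:

```python
from math import sqrt
from statistics import NormalDist


def lift_with_ci(conv_a: int, n_a: int, conv_b: int, n_b: int, level: float = 0.95):
    """Absolute lift, relative lift, and a Wald confidence interval for p_b - p_a."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    diff = p_b - p_a
    # Unpooled standard error, used when estimating the size of the difference
    se = sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return diff, diff / p_a, (diff - z * se, diff + z * se)


# Hypothetical counts: 2.00% vs 2.15% conversion on 100,000 visitors each
diff, rel, (low, high) = lift_with_ci(2_000, 100_000, 2_150, 100_000)
print(f"absolute lift {diff:.2%}, relative lift {rel:.1%}, "
      f"95% CI [{low:.2%}, {high:.2%}]")
```

Here the interval excludes zero, so the result is statistically significant, yet the lower bound corresponds to a gain of only a few hundredths of a percentage point. Whether that justifies the implementation cost is a business judgment, not a statistical one.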

Finally, applying the findings to ongoing conversion optimization requires continuous iteration. Insights derived from a/b test results analysis should inform future experiments and strengthen marketing strategies. By consistently analyzing test data with a clear focus on meaningful improvements, businesses can enhance customer experience and maximize ROI. For those looking to deepen their understanding of experimentation methods, it is beneficial to learn a/b testing on kameleoon.com, which offers comprehensive resources for optimizing conversions effectively.

Real-World Examples and Proven Case Studies

Examining a/b testing case studies gives valuable insight into how businesses achieve measurable conversion uplifts. For instance, an e-commerce platform implemented a variation of their checkout page, resulting in a 15% increase in completed purchases. This conversion uplift example demonstrates the power of optimizing user interface elements grounded in concrete data rather than assumptions.

Industries such as retail, SaaS, and finance have established clear benchmarks for conversion rates after systematic testing. Retail brands commonly see conversion increases of between 10% and 25% after A/B testing, underlining the practical effects of focused optimization efforts. These benchmarks serve as realistic goals for companies to strive towards, helping them avoid both unrealistic expectations and underperformance.

From these case studies, several key takeaways emerge: prioritize elements like call-to-action buttons, headlines, and page load speed; ensure tests have sample sizes large enough to reach statistical significance; and always analyze post-test data to implement the winning variation effectively. By studying these proven examples, marketers and product teams gain a robust framework to design their own experiments poised for success.

To dive deeper into the facets of effective testing strategies and maximize conversion uplift, you can learn a/b testing on kameleoon.com. This resource collates best practices seen across multiple industries, helping to refine your own approach with expert guidance.

Tools and Resources for Comprehensive A/B Testing

When engaging in A/B testing, selecting the right A/B testing tools is crucial for obtaining reliable and actionable results. Leading experimentation platforms offer features such as traffic segmentation, multivariate testing, and real-time analytics, which help marketers and product teams optimize conversions effectively. Platforms like Optimizely and VWO provide user-friendly interfaces combined with robust backend support, making them popular choices in the marketplace. Google Optimize was also widely used until Google discontinued it in September 2023.

Integrating analytics with experimentation platforms enhances testing by linking behavioral data directly to variant performance. Tools like Google Analytics can be connected to many a/b testing tools to track user journeys and measure the impact of changes beyond simple click rates. This integration ensures a holistic view of performance, allowing for data-driven decision-making based on comprehensive metrics.

To keep pace with evolving methodologies and refine testing strategies, tapping into specialized optimization resources is vital. These include expert blogs, webinars, and online communities that focus on the latest developments in A/B testing and experimentation. For those looking to deepen their understanding, there are extensive guides available that explain how to structure tests, interpret results, and implement winning variations. One recommended approach is to learn a/b testing on kameleoon.com, where resources are designed to support both beginners and advanced users in honing their experimentation capabilities.

By utilizing advanced a/b testing tools, integrating with analytics platforms, and engaging with top-tier optimization resources, organizations can significantly improve their experimentation outcomes and drive continuous optimization success.

Avoiding Common Pitfalls in A/B Testing


Avoiding a/b testing mistakes is crucial for reliable results and effective conversion optimization. One of the most frequent errors in test design is failing to define clear hypotheses and success metrics. Without these, it’s easy for the test to lose focus and produce inconclusive or misleading outcomes. To prevent this, start with precise questions about what you want to improve, then align your test setup accordingly.

Another common pitfall is mismanaging sample size and exposure duration. Running tests on too small a population leads to inconclusive results, while stopping a test early because it looks significant inflates the risk of false positives. Conversely, overly long tests waste valuable time and resources. Calculate the required sample size in advance from your baseline conversion rate and the minimum effect you want to detect, then use your expected traffic to estimate how long the test must run.
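
The standard normal-approximation formula for comparing two proportions makes the dependence on baseline rate and effect size explicit. In this sketch the baseline rate, the minimum detectable lift, the 5% significance level, and 80% power are all assumptions to replace with your own values:

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline: float, relative_mde: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Visitors needed in each group to detect the given relative lift."""
    p1 = baseline
    p2 = baseline * (1 + relative_mde)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided test
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


# Assumed: 3% baseline conversion, aiming to detect a 10% relative lift (3.0% -> 3.3%)
n = sample_size_per_variant(baseline=0.03, relative_mde=0.10)
print(n)
```

Halving the minimum detectable effect roughly quadruples the required sample, which is why small expected uplifts need long tests. Dividing the total across variants by your daily traffic gives the expected duration.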

Biased results often come from improper randomization or segmenting users incorrectly. Avoid exposing the same user to multiple variants or changing traffic allocation mid-test, as these actions introduce error and skew conversion metrics. Finally, external factors such as seasonality or simultaneous campaigns should be accounted for to maintain test integrity.
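
A quick way to catch broken randomization is a sample ratio mismatch check: compare the number of users actually assigned to each group with the planned split. The sketch below uses a chi-square goodness-of-fit test for a two-group test; because a chi-square statistic with one degree of freedom is the square of a standard normal variable, the p-value can be computed with the standard library alone. The counts and the 0.001 alert threshold are assumptions:

```python
from math import sqrt
from statistics import NormalDist


def srm_p_value(n_control: int, n_variant: int, expected_share: float = 0.5) -> float:
    """Chi-square (1 df) p-value for observed vs. planned allocation."""
    total = n_control + n_variant
    expected = (total * expected_share, total * (1 - expected_share))
    observed = (n_control, n_variant)
    chi2 = sum((o - e) ** 2 / e for o, e in zip(observed, expected))
    # With 1 degree of freedom, P(chi2 > x) equals P(|Z| > sqrt(x))
    return 2 * (1 - NormalDist().cdf(sqrt(chi2)))


# Hypothetical counts from a test planned as a 50/50 split
p = srm_p_value(50_600, 49_400)
if p < 0.001:  # assumed alert threshold
    print(f"Possible sample ratio mismatch (p = {p:.2e}); check assignment logic")
else:
    print(f"Allocation looks consistent with the planned split (p = {p:.3f})")
```

Even a 50.6/49.4 split is flagged at this traffic level, which usually points to a tracking or assignment bug rather than chance.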

By focusing on these areas, marketers can mitigate common conversion optimization pitfalls, obtaining trustworthy data that drives meaningful decisions. To deepen your understanding of effective A/B testing practices and improve error avoidance, consider exploring resources that detail how to structure robust experiments. For applied techniques, you might want to learn a/b testing on kameleoon.com, which provides valuable insights to optimize your testing strategy.